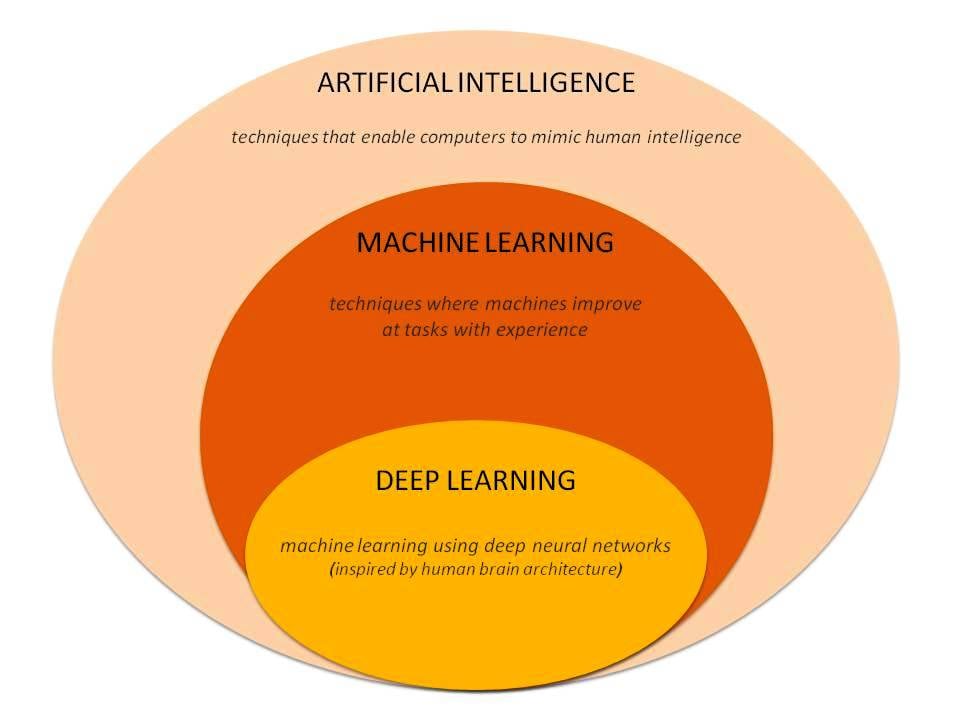

A smart algorithm built on a neural network has been trained to recognize the appearance of breast cancer in MR images. The algorithm, described at the SBI/ACR Breast Imaging Symposium, uses deep learning, a form of machine learning, which is itself a type of artificial intelligence. Image courtesy of Sarah Eskreis-Winkler, M.D.

Smart algorithms have the potential to make healthcare more efficient. Sarah Eskreis-Winkler, M.D., presented data showing that one such algorithm, trained using deep learning (DL), a type of artificial intelligence (AI), can reliably identify breast tumors in magnetic resonance (MR) images. In doing so, it could help make radiology more efficient.

On April 4, at the Society for Breast Imaging (SBI)/American College of Radiology (ACR) Breast Imaging Symposium, Eskreis-Winkler said that the algorithm could save time without compromising accuracy. Deep learning, she explained in her talk, is a subset of machine learning, which is part of artificial intelligence.

“Deep learning is a new powerful technology that has the potential to help us with a wide range of imaging tasks,” said Eskreis-Winkler, a radiology resident at Weill Cornell Medicine/New York-Presbyterian Hospital. In her talk at the SBI symposium, she said DL has been “shown to meet and in some cases exceed human-level performance.”

How The DL Algorithm Was Developed

Eskreis-Winkler and her colleagues used a neural network to classify segments of the MR image and to extract features. The algorithm learned to do this on its own. Using DL eliminated the need to tell the computer exactly what to look for, she said during the presentation: “We just feed the entire image into the neural network, and the computer figures out which parts are important all by itself.”

Eskreis-Winkler, who is working toward a doctorate in MRI physics “interspersed with the residency,” outlined the development of a deep learning tool for clinical use. Initially, many batches of labeled images are fed into the neural network. When training begins, the network weights, which are used to make decisions, are randomly initialized. “So network accuracy is about as good as a coin toss,” she said.
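The "coin toss" remark can be illustrated with a toy sketch. It assumes a single linear unit in place of a deep network and synthetic feature vectors in place of MR slices; all names here are hypothetical and this is not the study's code. Because randomly drawn weights are as likely to point one way as the opposite way, and flipping the weights flips every prediction, an untrained classifier's accuracy averages out to about 50 percent on a two-class problem.

```python
import random

DIM = 8  # hypothetical number of features per "slice"

def predict(w, x):
    """Hypothetical linear classifier: 1 (tumor) if w.x > 0, else 0 (no tumor)."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) > 0 else 0

def accuracy(w, data):
    """Fraction of labeled examples the classifier gets right."""
    return sum(predict(w, x) == y for x, y in data) / len(data)

def make_toy_data(rng, n=200, dim=DIM):
    """Synthetic stand-in for labeled slices: label is 1 if the first feature is positive."""
    data = []
    for _ in range(n):
        x = [rng.gauss(0, 1) for _ in range(dim)]
        data.append((x, 1 if x[0] > 0 else 0))
    return data

rng = random.Random(0)
data = make_toy_data(rng)

# 500 freshly initialized (untrained) classifiers, each with random weights.
accs = [accuracy([rng.gauss(0, 1) for _ in range(DIM)], data) for _ in range(500)]
mean_acc = sum(accs) / len(accs)
print(f"mean accuracy of untrained classifiers: {mean_acc:.2f}")
```

Individual random initializations can land well above or below 50 percent; it is the average that sits near a coin toss. Training is what moves the weights away from this starting point.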

The network, however, learns from its mistakes using a process called backpropagation, whereby the error on wrongly categorized images is propagated backward through the network and the decision weights are adjusted to reduce it. “So the next time the network is fed a similar case, it has learned from its mistake and it gets the answer right,” said Eskreis-Winkler, who plans to be a breast imaging fellow at Memorial Sloan Kettering Cancer Center (MSKCC) after completing her Ph.D. and residency in June 2019. Work on the project was done at MSKCC, she said, with Harini Veeraraghavan, Natsuko Onishi, Shreena Shah, Meredith Sadinski, Danny Martinez, Yi Wang, Elizabeth Morris and Elizabeth Sutton.
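A minimal sketch of the weight update, assuming a single sigmoid neuron with binary cross-entropy loss rather than the multi-layer network used in the study. In a deep network the same error signal is passed back through every layer by the chain rule; with only one layer, the update for each weight is simply the error (p - y) times that weight's input. The function names and example values are hypothetical.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def forward(w, b, x):
    """Probability that slice features x show tumor, for one logistic unit."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

def backprop_step(w, b, x, y, lr=0.5):
    """One gradient-descent step on binary cross-entropy loss.

    For a sigmoid output with cross-entropy loss, the error signal at the
    pre-activation is (p - y); the chain rule then gives
    dL/dw_i = (p - y) * x_i and dL/db = (p - y).
    """
    err = forward(w, b, x) - y
    new_w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    new_b = b - lr * err
    return new_w, new_b

# Hypothetical "tumor" slice (label 1) that the current weights misclassify.
w, b = [-0.8, 0.3, -0.5], 0.0
x, y = [1.0, 0.2, 0.9], 1

p_before = forward(w, b, x)
steps = 0
while forward(w, b, x) < 0.5 and steps < 100:
    w, b = backprop_step(w, b, x, y)
    steps += 1
p_after = forward(w, b, x)
print(f"p(tumor) before: {p_before:.3f}, after {steps} steps: {p_after:.3f}")
```

Repeating the step on the same misclassified slice pushes its tumor probability past 0.5, mirroring the "learns from its mistake" description; real training instead applies these updates averaged over batches of many images.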

After her symposium talk, Eskreis-Winkler told Imaging Technology News that, if integrated into the clinical workflow, the algorithm has the potential to improve the efficiency of the radiologist, “so that the tumor pops up when you open a case on PACS.” Its use might also save time during tumor boards, she said, by automatically scrolling to breast MRI slices that show cancer lesions. This would eliminate the time otherwise spent manually scrolling to these slices.
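One way the auto-scroll idea could work is sketched below, assuming the classifier outputs a tumor probability for each slice in a series. The 0.5 threshold, the function names and the probability values are hypothetical, and the sketch does not use any real PACS interface.

```python
from typing import List, Optional, Sequence

def slices_to_show(tumor_probs: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Indices of slices flagged as showing tumor, most confident first."""
    flagged = [i for i, p in enumerate(tumor_probs) if p >= threshold]
    return sorted(flagged, key=lambda i: tumor_probs[i], reverse=True)

def slice_to_open(tumor_probs: Sequence[float], threshold: float = 0.5) -> Optional[int]:
    """Slice a viewer could jump to first, or None if no slice is flagged."""
    ranked = slices_to_show(tumor_probs, threshold)
    return ranked[0] if ranked else None

# Hypothetical per-slice tumor probabilities for a seven-slice series.
probs = [0.02, 0.05, 0.40, 0.91, 0.97, 0.63, 0.08]
print(slice_to_open(probs))   # 4
print(slices_to_show(probs))  # [4, 3, 5]
```

Ranking by confidence, rather than just taking the first flagged slice, would let a reader step through the most suspicious slices in order.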

DL Algorithm Scores in the ’90s

The algorithm that she described at the SBI symposium processed MR images from 277 women, classifying segments within these images as either showing or not showing tumor. The algorithm achieved an accuracy of 93 percent on a test set. Sensitivity and specificity for tumor detection were 94 percent and 92 percent, respectively.
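The three reported figures are computed from the counts of true positives (TP), false positives (FP), true negatives (TN) and false negatives (FN) on the test set. The sketch below shows the arithmetic. The counts are hypothetical, chosen only so that an evenly split 200-segment test set reproduces the reported percentages; they are not the study's actual confusion matrix.

```python
def detection_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Standard binary-detection metrics from confusion-matrix counts."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,   # all correct calls / all calls
        "sensitivity": tp / (tp + fn),   # tumor segments correctly flagged
        "specificity": tn / (tn + fp),   # tumor-free segments correctly cleared
    }

# Hypothetical counts for illustration only (100 tumor, 100 tumor-free segments).
m = detection_metrics(tp=94, fn=6, tn=92, fp=8)
for name, value in m.items():
    print(f"{name}: {value:.0%}")
```

Because accuracy equals the prevalence-weighted average of sensitivity and specificity, it always lies between the two; in this hypothetical example it sits at the midpoint because the test set is evenly split.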

She described the results as “promising, because the dataset size we were using — about 6,000 slices — wasn’t even so big by deep learning standards. Going forward we should be able to improve our results by increasing the size of our dataset.”

DL works best with at least 20,000 slices, Eskreis-Winkler said.

Deep learning will not provide the whole solution, she cautioned. For DL algorithms to achieve their potential, people will have to work with them.

“The way in which AI tools will be integrated into our daily practice is still uncertain,” she said in her SBI presentation. “So there is a big opportunity for us to be creative and to be proactive, to come up with ways to harness the power of AI to make us better radiologists and to better serve our patients.”

Machines make diagnostic errors, as do radiologists, Eskreis-Winkler asserted. “But they don’t make the same kinds of errors,” she told ITN. “So one of the really exciting areas is to figure out how to best combine the power of humans and machines, to push our diagnostic performance to new heights. This is an initial step in that direction.”

Greg Freiherr is a contributing editor to Imaging Technology News (ITN). Over the past three decades, he has served as business and technology editor for publications in medical imaging, as well as consulted for vendors, professional organizations, academia and financial institutions.

February 05, 2026