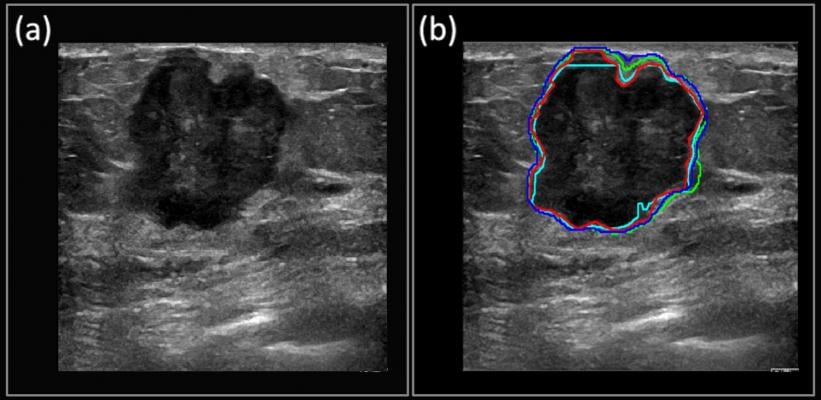

The red outline shows the manually segmented boundary of a carcinoma, while the deep learning-predicted boundaries are shown in blue, green and cyan. Copyright 2018 Kumar et al. under Creative Commons Attribution License.

June 12, 2018 — Viksit Kumar didn’t know his mother had ovarian cancer until it had reached its third stage, too late for chemotherapy to be effective. She died in a hospital in Mumbai, India, in 2006, but might have lived years longer if her cancer had been detected earlier. This knowledge ate at the mechanical engineering student, spurring him to choose a different path.

“That was one of the driving factors for me to move into the medical field,” said Kumar, now a senior research fellow at the Mayo Clinic, in Rochester, Minn. He hopes that the work his mom’s death inspired will help others to avoid her fate.

For the past few years, Kumar has been leading an effort to use GPU-powered deep learning to diagnose cancers earlier and more accurately from ultrasound images. The work has focused on breast cancer (which is much more prevalent than ovarian cancer and attracts more funding), with the primary aim of enabling earlier diagnoses in developing countries, where mammograms are rare.

Into the Deep End of Deep Learning

Kumar came to this work soon after joining the Mayo Clinic. At the time, he was working with ultrasound images for diagnosing pre-term birth complications. When he noticed that ultrasounds were picking up different objects, he figured that they might be useful for classifying breast cancer images.

As he looked closer at the issue, he deduced that deep learning would be a good match. However, at the time, Kumar knew very little about deep learning. So he dove in, spending more than six months teaching himself everything he could about building and working with deep learning models.

“There was a drive behind that learning: This was a tool that could really help,” he said.

And help is needed. Breast cancer is one of the most common cancers, and one of the easiest to detect. However, in developing countries, mammogram machines are hard to find outside of large cities, primarily due to cost. As a result, healthcare providers often take a conservative approach and perform unnecessary biopsies.

Ultrasound offers a much more affordable option for far-flung facilities, which could lead to more women being referred for mammograms in large cities.

Even in developed countries, where most women have regular mammograms after the age of 40, Kumar said ultrasound could prove critical for diagnosing women who are pregnant or are planning to get pregnant, and who can’t be exposed to a mammogram’s X-rays.

Getting Better All the Time

Kumar is amazed at how far deep learning tools have already progressed. It used to take him two or three days to configure a system for deep learning; now it takes as little as a couple of hours.

Kumar’s team does its local processing using the TensorFlow deep learning framework container from NVIDIA GPU Cloud (NGC) on NVIDIA TITAN and GeForce GPUs. For the heaviest lifting, the work shifts to NVIDIA Tesla V100 GPUs on Amazon Web Services, using the same container from NGC.

The NGC containers are optimized to deliver maximum performance on NVIDIA Volta and Pascal architecture GPUs on-premises and in the cloud, and include everything needed to run GPU-accelerated software. And using the same container in both environments allows the team to run jobs wherever it has compute resources.

“Once we have the architecture developed and we want to iterate on the process, then we go to AWS [Amazon Web Services],” said Kumar, estimating that doing so is at least eight times faster than processing larger jobs locally, thanks to the greater number of more advanced GPUs in play.

The team currently does both training and inference on the same GPUs. Kumar said he wants to do inference on an ultrasound machine in live mode.

More Progress Coming

Kumar hopes to start applying the technique in live patient trials within the next year.

Eventually, he hopes his team’s work enables ultrasound images to be used in early detection of other cancers, such as thyroid and, naturally, ovarian cancer.

Kumar urges patience when it comes to applying AI and deep learning in the medical field. “It needs to be a mature technology before it can be accepted as a clinical standard by radiologists and sonographers,” he said.

Read Kumar’s paper, “Automated and real-time segmentation of suspicious breast masses using convolutional neural network.”

For more information: www.nvidia.com

This piece originally appeared as a blog post on NVIDIA’s website.

February 06, 2026
