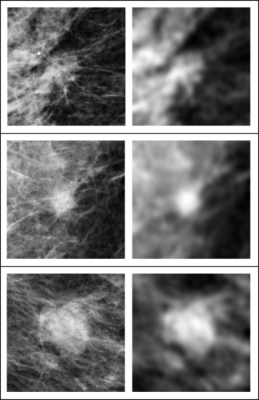

In these three examples of soft tissue lesions, the left column shows the unperturbed images and the right column shows the blurred versions. The AI system was sensitive to the blurring, while the radiologists were not, which showed that the AI system relies on details in soft tissue lesions that radiologists consider irrelevant. Image courtesy of Taro Makino, NYU’s Center for Data Science.

April 29, 2022 — Radiologists and artificial intelligence systems differ significantly in how they read breast cancer screenings, a team of researchers has found. The work, which appears in the journal Nature Scientific Reports, reveals the potential value of using both human and AI methods in making medical diagnoses.

“While AI may offer benefits in healthcare, its decision-making is still poorly understood,” explains Taro Makino, a doctoral candidate in NYU’s Center for Data Science and the paper’s lead author. “Our findings take an important step in better comprehending how AI yields medical assessments and, with it, offer a way forward in enhancing cancer detection.”

The analysis centered on a specific AI tool: deep neural networks (DNNs), which are layers of computing elements (“neurons”) simulated on a computer. A network of such neurons can be trained to “learn” by building many layers and configuring how calculations are performed based on data input, a process called “deep learning.”

In the Nature Scientific Reports work, the scientists compared breast-cancer screenings read by radiologists with those analyzed by DNNs.

The researchers, who also included Krzysztof Geras, Ph.D., Laura Heacock, MD, and Linda Moy, MD, faculty in NYU Grossman School of Medicine’s Department of Radiology, found that DNNs and radiologists diverged significantly in how they diagnose a category of malignant breast cancer called soft tissue lesions.

“In these breast-cancer screenings, AI systems consider tiny details in mammograms that are seen as irrelevant by radiologists,” explains Geras. “This divergence in readings must be understood and corrected before we can trust AI systems to help make life-critical medical decisions.”

More specifically, while radiologists primarily relied on brightness and shape, the DNNs used tiny details scattered across the images. These details were also concentrated outside of the regions deemed most important by radiologists.

By revealing such differences between human and machine perception in medical diagnosis, the researchers moved to close the gap between academic study and clinical practice.

“Establishing trust in DNNs for medical diagnosis centers on understanding whether and how their perception is different from that of humans,” says Moy. “With more insights into how they function, we can both better recognize the limits of DNNs and anticipate their failures.”

“The major bottleneck in moving AI systems into the clinical workflow is in understanding their decision-making and making them more robust,” adds Makino. “We see our research as advancing the precision of AI’s capabilities in making health-related assessments by illuminating, and then addressing, its current limitations.”

For more information: www.nyu.edu

Related Breast Imaging with AI Content:

AI Shows Potential in Breast Cancer Screening Programs

Artificial Intelligence Tool Improves Accuracy of Breast Cancer Imaging
