
March 28, 2024 — As artificial intelligence (AI) makes its way into cancer care – and into discussions between physicians and patients – oncologists are grappling with the ethics of its use in medical decision-making. In a recent survey by researchers at Dana-Farber Cancer Institute, more than 200 oncologists across the U.S. were in broad agreement on how AI can be responsibly integrated into some aspects of patient care, and also expressed concern about how to protect patients from hidden biases of AI.

About the AI Survey

The survey, described in a paper published on March 28 in JAMA Network Open, found that 85% of respondents said that oncologists should be able to explain how AI models work, but only 23% thought patients need the same level of understanding when considering a treatment. Just over 81% of respondents felt patients should give their consent to the use of AI tools in making treatment decisions.

When the survey asked oncologists what they would do if an AI system selected a treatment regimen different from the one they planned to recommend, the most common answer, offered by 37% of respondents, was to present both options and let the patient decide.

When asked who has responsibility for medical or legal problems arising from AI use, 91% of respondents pointed to AI developers. This was much higher than the 47% who felt the responsibility should be shared with physicians, or the 43% who felt it should be shared with hospitals.

And while 76% of respondents noted that oncologists should protect patients from biased AI tools – which reflect inequities in who is represented in medical databases – only 28% were confident that they could identify AI models that contain such bias.

"The findings provide a first look at where oncologists are in thinking about the ethical implications of AI in cancer care," says Andrew Hantel, MD, a faculty member in the Divisions of Leukemia and Population Sciences at Dana-Farber Cancer Institute who led the study with Gregory Abel, MD, MPH, a senior physician at Dana-Farber. "AI has the potential to produce major advances in cancer research and treatment, but there hasn't been a lot of education for stakeholders – the physicians and others who will use this technology – about what its adoption will mean for their practice."

"It's critical that we assess now, in the early stages of AI's application to clinical care, how it will impact that care and what we need to do to make sure it's deployed responsibly. Oncologists need to be part of that conversation. This study seeks to begin building a bridge between the development of AI and the expectations and ethical obligations of its end-users."

Growing Uses for AI in Radiation Oncology

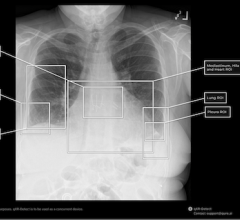

Hantel notes that AI is currently used in cancer care primarily as a diagnostic tool – for detecting tumor cells on pathology slides and identifying tumors on X-rays and other radiology images. However, new AI models are being developed that can assess a patient's prognosis and may soon be able to offer treatment recommendations. This capability has raised concerns over who or what is legally responsible should an AI-recommended treatment result in harm to a patient.

"AI is not a professionally licensed medical practitioner, yet it could someday be making treatment decisions for patients," Hantel notes. "Is AI going to be its own practitioner, will it be subject to licensing, and who are the humans who could be held responsible for its recommendation? These are the kind of medico-legal issues that need to be resolved before the technology is implemented.

"Our survey found that while nearly all oncologists felt AI developers should bear some responsibility for treatment decisions generated by AI, only half felt that responsibility also rested with oncologists or hospitals," added Hantel. "Our study gives a sense of where oncologists currently land on this and other ethical issues related to AI and, we hope, serves as a springboard for further consideration of them in the future."

Financial support for the study was provided by the National Cancer Institute of the National Institutes of Health (grants K08 CA273043 and P30 CA006516-57S2); the Dana-Farber McGraw/Patterson Research Fund for Population Sciences; and a Mark Foundation Emerging Leader Award.

For more information: https://www.dana-farber.org/

February 26, 2026