Reporting is one of the areas in medical imaging informatics that has advanced the most in recent years. With much of the imaging workflow now digitized and carried out through second-, if not third-generation image and information management systems such as picture archiving and communication systems (PACS) or radiology information systems (RIS), the “third pillar” of the end-to-end digital imaging workflow has grown less isolated and more influential than ever before, signaling the advent of true voice-enabled and speech-driven radiology reporting.

Ongoing Market Transition to Technology-based Solutions

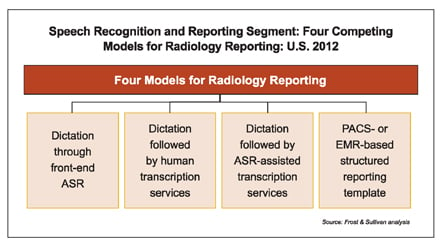

Automated speech recognition (ASR) constitutes the technology-based alternative to a more traditional, human-based service model. Rather than employing full-time medical transcriptionists as part of in-house staff or through a contract with medical transcription service organizations (MTSO), ASR allows providers to perform more of the reporting function themselves, without a huge time penalty.

By capturing the voice of an interpreting radiologist through voice dictation and then using natural language processing (NLP) algorithms to transcribe speech into text, ASR can assist radiologists in carrying out the reporting function all the way to report sign-off (front-end ASR).

In the case of back-end ASR, the same technology is used to accelerate the work of a transcriptionist, who has more of the groundwork done automatically and can devote more time to quality assurance on final reports. As such, in some cases radiology can rely entirely on a technology-based solution for the reporting task, while in other cases there is still some reliance on transcription services. The U.S. market has come a long way in the adoption of technology-based solutions.

Frost & Sullivan, a market research and analysis firm, estimates that between one in four and one in three imaging providers still rely entirely on the human transcriptionist model, while the majority (between two out of three and three out of four) use some kind of technology-based automated speech recognition solution.

Improved Technology for Higher Clinical Efficiency

Perhaps the most important “new” feature in the latest generation of ASR solutions, as far as having direct impact on imaging providers in the past year or two, is the big jump in accuracy of the latest speech engines: their ability to recognize each word a radiologist dictates the first time, without the need to repeat or correct it, and regardless of speech variations such as an English accent, or background noise interference.
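One common way to put a number on word-level accuracy is the word error rate (WER): the minimum number of word substitutions, insertions and deletions needed to turn the recognized text into what was actually dictated, divided by the number of dictated words. The sketch below is a minimal illustration of that metric, not a description of how any vendor measures its engine; the function name and the sample sentences are invented for the example.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance between two texts, divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference text must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# Invented example: the engine drops one word out of six.
dictated = "no acute cardiopulmonary abnormality is identified"
recognized = "no acute cardiopulmonary abnormality identified"
wer = word_error_rate(dictated, recognized)
print(f"WER: {wer:.3f}, word accuracy: {1 - wer:.3f}")
```

Under this definition, an engine that is 99 percent accurate gets roughly one word wrong in every hundred dictated.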

In fact, as the generic versions of speech engines continue to be developed for multiple industries outside of healthcare (think Apple’s “Siri” and voice-controlled car features), every two or three years we see a major migration of the healthcare-specific solutions to the latest generation of these engines.

Complemented by ever-growing libraries of medical and radiology-specific terms, the new generation of engines recognizes more words from the outset, which in turn increases the efficiency of the reporting process and the productivity of radiologists.

The real novelty in this performance boost of the large vendors’ latest speech recognition solutions, which are now close to 99 percent accurate, lies in how they combine higher speech engine accuracy with a larger vocabulary. With techniques and algorithms borrowed from the field of artificial intelligence (AI), the solutions essentially add a layer of machine reading comprehension that takes medical imaging reporting beyond natural language processing (NLP) and into the next level of natural language understanding (NLU).

Practically, the new generation of speech solutions is able to grasp the context of a phrase beyond individual words, and use this context base to make smart suggestions, provide alerts of critical findings or highlight potential errors before final report sign-off.
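To make the idea of a check before sign-off concrete, here is a deliberately simple stand-in: a keyword rule that flags a report whose impression names a side of the body that the findings never mention. This is plain pattern matching, not natural language understanding, and it is not how any commercial product works; the function names and sample text are hypothetical.

```python
import re

SIDES = ("left", "right")


def sides_mentioned(text):
    """Return the set of laterality words that appear as whole words in text."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return {side for side in SIDES if side in words}


def laterality_warnings(findings, impression):
    """Warn when the impression names a side that the findings never mention."""
    only_in_impression = sides_mentioned(impression) - sides_mentioned(findings)
    return [
        f"Impression mentions '{side}' but findings do not"
        for side in sorted(only_in_impression)
    ]


# Invented report text with a left/right mix-up.
print(laterality_warnings(
    findings="Small left pleural effusion. No pneumothorax.",
    impression="Small right pleural effusion.",
))
```

A rule this crude misses anything it was not written for, which is the gap that context-aware engines aim to close.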

From Speech Engine to Integration Platform

In the absence of large capital available to forklift upgrade imaging informatics solutions, especially in the wake of meaningful use (MU) initiatives, imaging providers are striving to improve the integration and interoperability of their current solutions.

Developing bi-directional connectivity between the speech recognition solution and PACS and/or RIS is one such integration effort. Whether the reporting workflow is PACS-driven or RIS-driven, bi-directional connectivity with the reporting applications allows patient information to be populated back and forth between these systems and also presents the potential to close the loop with electronic medical records (EMR) and hospital information systems (HIS).

This bi-directional connectivity also allows the reporting solution to be the access point to imaging, such as in the case of an affiliated radiologist working from outside the facility, having only Web access to the reporting solution and wanting to access patient reports and images.

As they feed from and into multiple, sometimes disparate IT systems, speech recognition and reporting solutions are evolving from being a point solution to being a true integration engine for the overall imaging workflow. Many leading healthcare institutions are, in fact, expanding these solutions into an informatics platform to leverage clinical and business analytics and decision support.

A Platform for Business and Clinical Data Analytics

If there are two “mega trends” in healthcare IT poised as drastic game-changers for healthcare, data analytics is one of them, along with patient engagement. However, much like patient engagement hinges on educated healthcare “consumers,” the feasibility of data analytics in medical imaging is based on the assumption that “clean,” structured and consistent data points exist.

Radiology is behind other areas, notably cardiology, in its adoption of structured reports and templates throughout its sub-specialties. Estimates place the current penetration level of structured reporting in radiology in the vicinity of 60 percent, but this number continues to grow gradually as there is almost never a “way back” from shifting to structured reporting. When looking at the adoption curve so far, and given it appears to be following a top-down approach from large academic to smaller-scale providers, the adoption level can be projected as near 90 percent in the United States within five to seven years’ time.

Radiologists’ resistance to change is still slowing the adoption curve of structured reporting. The potential drawbacks in productivity and profitability caused in the short term through the learning curve are worth the investment versus continuing self-editing and self-reporting by radiologists. However, this resistance is slowly but surely being offset by the obvious benefits in the long term, notably those that stem from developing business and clinical analytics to support decisions in the enterprise.

Moving beyond rather basic dashboards that analyze HL7 messages to track relative value units (RVU) or other performance metrics such as report turnaround or emergency turnaround times, the more avant-garde providers are already making progressive use of the embedded business analytics capabilities of their speech recognition systems, of in-house developed applications as well as of third-party systems. Early adopters are using these tools to make data-driven business and clinical decisions, then analyzing the outcomes of these choices with confidence afterward.
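A basic dashboard metric of the kind mentioned above can be sketched in a few lines. The example below computes report turnaround from HL7 v2 result messages, assuming that turnaround is measured from the observation time (OBR-7) to the time the report reached final status (OBR-22, with OBR-25 equal to "F"). That definition is an assumption made for illustration, since sites measure turnaround in different ways, and the messages are invented toy data built by a hypothetical helper rather than real feeds.

```python
from datetime import datetime
from statistics import median

HL7_TS = "%Y%m%d%H%M%S"  # HL7 v2 timestamp down to the second


def make_sample_message(observed, reported, status):
    """Build a toy ORU^R01 message; every value is invented for illustration."""
    msh = "|".join(["MSH", "^~\\&", "RIS", "", "", "", "", "", "ORU^R01"])
    obr = [""] * 26
    obr[0] = "OBR"
    obr[7] = observed    # OBR-7: observation date/time
    obr[22] = reported   # OBR-22: results report/status change date/time
    obr[25] = status     # OBR-25: result status ("F" = final)
    return msh + "\r" + "|".join(obr)


def turnaround_minutes(message):
    """Minutes from observation to final report, or None if no final OBR."""
    for segment in message.split("\r"):
        fields = segment.split("|")
        if fields[0] != "OBR" or len(fields) <= 25 or fields[25] != "F":
            continue
        observed = datetime.strptime(fields[7][:14], HL7_TS)
        reported = datetime.strptime(fields[22][:14], HL7_TS)
        return (reported - observed).total_seconds() / 60
    return None


SAMPLE_MESSAGES = [
    make_sample_message("20130105083000", "20130105101500", "F"),
    make_sample_message("20130105090000", "20130105093000", "F"),
    make_sample_message("20130105091500", "20130105092000", "P"),  # preliminary
]

times = [t for t in map(turnaround_minutes, SAMPLE_MESSAGES) if t is not None]
print(f"final reports: {len(times)}, median turnaround: {median(times):.1f} min")
```

Preliminary reports are skipped so that only signed-off results count toward the metric. A production feed would also need to handle repeated segments, time zones and partial timestamps, which this sketch ignores.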

Nadim Michel Daher is an industry principal analyst with Frost & Sullivan’s advanced medical technologies practice, specializing in medical imaging informatics and modalities. With almost a decade of industry expertise covering U.S., Middle Eastern and Canadian markets, his knowledge base covers radiology, PACS/RIS, advanced visualization, IT middleware and teleradiology, as well as CT, MRI and ultrasound technologies.

SIDEBAR:

A Quick Look at the Competition

In the past few years, the speech recognition industry witnessed an ongoing wave of consolidation, sometimes through predatory merger and acquisition activity, especially among medical transcription service organizations (MTSO), considering MedQuist’s acquisition of Spheris (April 2010) and Nuance’s acquisitions of Webmedx (July 2011) and Transcend Services (March 2012).

Any way one looks at it — by number of customers, imaging procedures or revenue — Nuance Communications is the clear market leader for technology-based automated speech recognition (ASR) in the U.S. medical imaging (and also broader healthcare) market, based on Frost & Sullivan research.

Nuance leads the market for both back-end and front-end ASR. Its closest competitor, M*Modal (formerly MedQuist), the established MTSO market leader, has moved up from a distant second position in the ASR market since MedQuist acquired M*Modal in 2011, albeit while losing share to Nuance in the MTSO market due to Nuance’s recent MTSO acquisitions.

With Nuance and M*Modal established by far as the two largest industry forces, and with a fragmented field of smaller vendors behind them, the market is facing something of a vendor duopoly, but still benefits from the high level of competition that persists among all vendors.

In Analytics

The emergence of analytics in medical imaging is shaping a new competitive niche in the industry, one poised to get more visibility and attention from both vendors and providers. Two companies can be deemed the current movers and shakers in this area, namely Medicalis and Montage Healthcare, with notable mentions going to companies such as Softek and Primordial Design. Some of these companies are already partnering with the large speech recognition vendors (as with Nuance and Montage), while the large vendors are aggressively developing their own capabilities on the analytics front.

January 14, 2026
