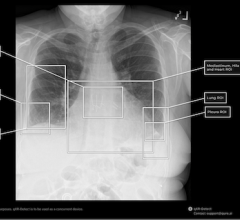

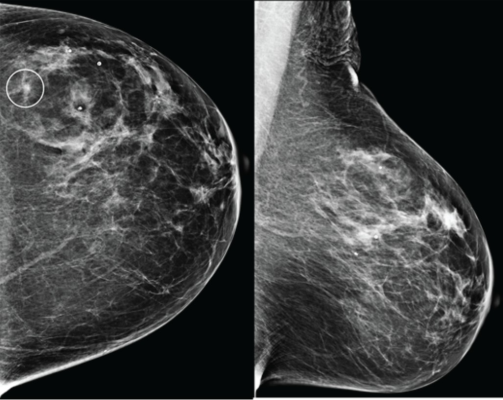

Craniocaudal (CC; left) and mediolateral oblique (right) digital mammography images of left breast were assessed by interpreting radiologist as BI-RADS category 1, consistent with negative result. Artificial intelligence (AI) system flagged asymmetry in lateral breast on CC view (circle) and categorized examination as intermediate risk, consistent with negative or positive result depending on threshold used for categorizing AI results. Patient was not diagnosed with breast cancer within 1 year after screening examination, consistent with negative outcome according to present study’s reference standard. Thus, interpretation by radiologist was true negative and by AI system was false positive if defining both intermediate-risk and elevated-risk categories as positive. Annotation was not generated by AI system but rather was recreated by present authors based on AI output coordinates.

Sept. 3, 2025 — According to ARRS’ American Journal of Roentgenology (AJR), a commercial artificial intelligence (AI) system achieved high negative predictive value (NPV) but also demonstrated higher recall rates than radiologists when applied to large digital mammography (DM) and digital breast tomosynthesis (DBT) screening cohorts.

“In our study of more than 30,000 mammographic examinations, AI reliably identified negative cases with NPV comparable to radiologists, suggesting potential to streamline workflow,” wrote senior author Hannah S. Milch, MD, from the radiology department at the University of California, Los Angeles (UCLA). “However,” she noted, “AI was also associated with more frequent false-positive results, especially in the intermediate-risk category.”

Dr. Milch and her all-UCLA team analyzed 26,693 DM and 4,824 DBT examinations from the Athena Breast Health Network between 2010 and 2019. Radiologists’ interpretations were extracted from clinical reports, while a commercially available, FDA-cleared AI system classified exams as low, intermediate, or elevated risk. Breast cancer diagnoses within one year of screening were identified via a state cancer registry.

Among DM examinations, radiologists achieved sensitivity of 88.6%, specificity of 93.3%, recall rate of 7.2%, and NPV of 99.9%. When positive results were defined as elevated risk, the AI yielded 74.4% sensitivity, 86.3% specificity, 14.0% recall rate, and 99.8% NPV. Including intermediate-risk cases as positive increased sensitivity to 94.0% but raised the recall rate to 41.8%. Similar patterns were observed in the DBT cohort.
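To make the trade-off between the two AI thresholds concrete, the following minimal Python sketch computes the four reported metrics from a confusion matrix. It assumes the standard definitions (recall rate taken as the share of all examinations called positive), which the article does not spell out. The counts are hypothetical, chosen only to mimic the general pattern described above for a made-up cohort of 10,000 examinations with 50 cancers; they are not the study's data, and the names `ConfusionCounts` and `screening_metrics` are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts of screening results against a 1-year cancer reference standard."""
    tp: int  # called positive, cancer diagnosed
    fp: int  # called positive, no cancer diagnosed
    fn: int  # called negative, cancer diagnosed
    tn: int  # called negative, no cancer diagnosed


def screening_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Return sensitivity, specificity, recall rate, and NPV as fractions."""
    total = c.tp + c.fp + c.fn + c.tn
    return {
        "sensitivity": c.tp / (c.tp + c.fn),
        "specificity": c.tn / (c.tn + c.fp),
        # Assumed definition: fraction of all exams called positive.
        "recall_rate": (c.tp + c.fp) / total,
        "npv": c.tn / (c.tn + c.fn),
    }


# HYPOTHETICAL counts (not from the study): 10,000 exams, 50 cancers.
# Threshold A: only "elevated risk" counts as positive.
elevated_only = ConfusionCounts(tp=37, fp=1363, fn=13, tn=8587)
# Threshold B: "intermediate" and "elevated" risk both count as positive.
broad = ConfusionCounts(tp=47, fp=4133, fn=3, tn=5817)

for label, counts in [("elevated only", elevated_only), ("intermediate + elevated", broad)]:
    metrics = screening_metrics(counts)
    summary = ", ".join(f"{name}={value:.1%}" for name, value in metrics.items())
    print(f"{label}: {summary}")
```

In this illustration, widening the positive definition catches 10 more cancers but calls back thousands of additional cancer-free examinations, while NPV stays near 99.9% under both thresholds because cancers are rare in a screening population.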

“AI’s ability to safely triage negative screening exams could help radiologists focus their expertise on more challenging cases,” Milch et al. concluded. “Yet strategies are urgently needed to address false-positive results, particularly in intermediate-risk assessments, to avoid unnecessary recalls.”

March 06, 2026
