An example of how Agfa is integrating IBM Watson into its radiology workflow. Watson reviewed the X-ray images and the image order, determined the patient had lung cancer and a cardiac history, and pulled in the relevant prior exams, sections of the patient history, and cardiology and oncology department information. It also pulled in recent lab values and the patient's current medications. This allows for a more complete view of the patient's condition and may aid in diagnosis or determining the next step in care.

Artificial intelligence (AI) has captured the imagination and attention of doctors over the past couple years as several companies and large research hospitals work to perfect these systems for clinical use. The first concrete examples of how AI (also called deep learning, machine learning or artificial neural networks) will help clinicians are now being commercialized. These systems may offer a paradigm shift in how clinicians work in an effort to significantly boost workflow efficiency, while at the same time improving care and patient throughput.

Today, one of the biggest problems facing physicians and clinicians in general is the sheer volume of patient information they must sift through. This rapid accumulation of electronic data is thanks to the advent of electronic medical records (EMRs) and the capture of all sorts of data about a patient that was not previously recorded, or at least not easily mined. This includes imaging data, exam and procedure reports, lab values, pathology reports, waveforms, data automatically downloaded from implantable electrophysiology devices, data transferred from the imaging and diagnostics systems themselves, as well as the information entered in the EMR, admission, discharge and transfer (ADT), hospital information system (HIS) and billing software. In the next couple years there will be a further data explosion with the use of bidirectional patient portals, where patients can upload their own data and images to their EMRs. This will include images shot with their phones of things like wound site healing to reduce the need for in-person follow-up office visits. It also will include medication compliance tracking, blood pressure and weight logs, blood sugar, anticoagulant INR and other home monitoring test results, and activity tracking from apps, wearables and the evolving Internet of Things (IoT) to aid in keeping patients healthy.

Physicians liken all this data to drinking from a firehose because it is overwhelming. Many say it is very difficult or impossible to go through the large volumes of data to pick out what is clinically relevant or actionable. It is easy for things to fall through the cracks or for things to be lost to patient follow-up. This issue is further compounded when you add factors like increasing patient volumes, lower reimbursements, bundled payments and the conversion from fee-for-service to a fee-for-value reimbursement system.

Where Artificial Intelligence Will Help Radiology

This is where artificial intelligence will play a key role in the next couple years. AI will not be diagnosing patients and replacing doctors — it will be augmenting their ability to find the key, relevant data they need to care for a patient and present it in a concise, easily digestible format. When a radiologist calls up a chest computed tomography (CT) scan to read, the AI will review the image and identify potential findings immediately — from the image and also by combing through the patient history related to the particular anatomy scanned. If the exam order is for chest pain, the AI system will call up:

- All the relevant data and prior exams specific to prior cardiac history;

- Pharmacy information regarding drugs specific to COPD, heart failure, coronary disease and anticoagulants;

- Prior imaging exams from any modality of the chest that may aid in diagnosis;

- Prior reports for that imaging;

- Prior thoracic or cardiac procedures;

- Recent lab results; and

- Any pathology reports that relate to specimens collected from the thorax.

Patient history from prior reports or the EMR that may be relevant to potential causes of chest pain will also be collected by the AI and displayed in brief with links to the full information (such as history of aortic aneurysm, high blood pressure, coronary blockages, history of smoking, prior pulmonary embolism, cancer, implantable devices or deep vein thrombosis). This information would otherwise take too long to collect, or the physician might not know it exists and so would not spend time looking for it.
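The retrieval step described above can be pictured with a small sketch. It is only an illustration under stated assumptions: the `Record` type, the `RELEVANT_TAGS` table and the `gather_context` function are hypothetical names invented here, and a simple tag lookup stands in for whatever relevance model a commercial system actually uses.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical lookup: which record tags are worth surfacing for an exam indication.
RELEVANT_TAGS = {"chest pain": {"cardiac", "thorax", "anticoagulant", "copd"}}


@dataclass(frozen=True)
class Record:
    kind: str          # e.g. "prior_exam", "medication", "lab", "pathology"
    summary: str       # one-line description shown to the radiologist
    tags: frozenset    # clinical tags attached to the record (assumed to exist)
    recorded: date     # date the record was created


def gather_context(indication, records, limit=10):
    """Return the most recent records whose tags overlap the indication's tags."""
    wanted = RELEVANT_TAGS.get(indication.lower(), set())
    hits = [r for r in records if r.tags & wanted]
    hits.sort(key=lambda r: r.recorded, reverse=True)  # newest first
    return hits[:limit]


# Made-up records for a single patient.
records = [
    Record("prior_exam", "Chest CT, 2016", frozenset({"thorax"}), date(2016, 5, 2)),
    Record("medication", "Warfarin", frozenset({"anticoagulant"}), date(2017, 1, 10)),
    Record("prior_exam", "Knee MRI, 2015", frozenset({"knee"}), date(2015, 3, 8)),
]
context = gather_context("Chest pain", records)
```

In this toy case the knee MRI is left out, while the anticoagulant prescription and the prior chest CT are returned newest first.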

Watch the VIDEO “Examples of Artificial Intelligence in Medical Imaging Diagnostics.” This shows an example of how AI can assess mammography images.

Watch the VIDEO “Development of Artificial Intelligence to Aid Radiology,” an interview with Mark Michalski, M.D., director of the Center for Clinical Data Science at Massachusetts General Hospital, explaining the basis of artificial intelligence in radiology.

At the 2017 Healthcare Information and Management Systems Society (HIMSS) annual conference in February, several vendors showed some of the first concrete examples of how this type of AI works. IBM/Merge, Philips, Agfa and Siemens have already started integrating AI into their medical imaging software systems. GE showed predictive analytics software that uses elements of AI to forecast the impact on imaging departments when someone calls in sick or when patient volumes increase. Vital showed a similar work-in-progress predictive analytics software for imaging equipment utilization. Others, including several analytics companies and startups, showed software that uses AI to quickly sift through massive amounts of big data or to offer immediate clinical decision support for appropriate use criteria, the best test or imaging exam to make a diagnosis, or even differential diagnoses.

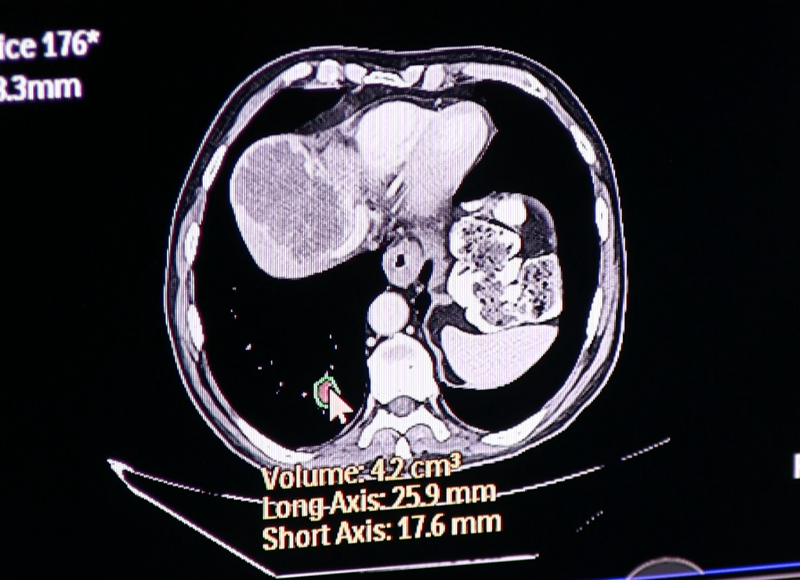

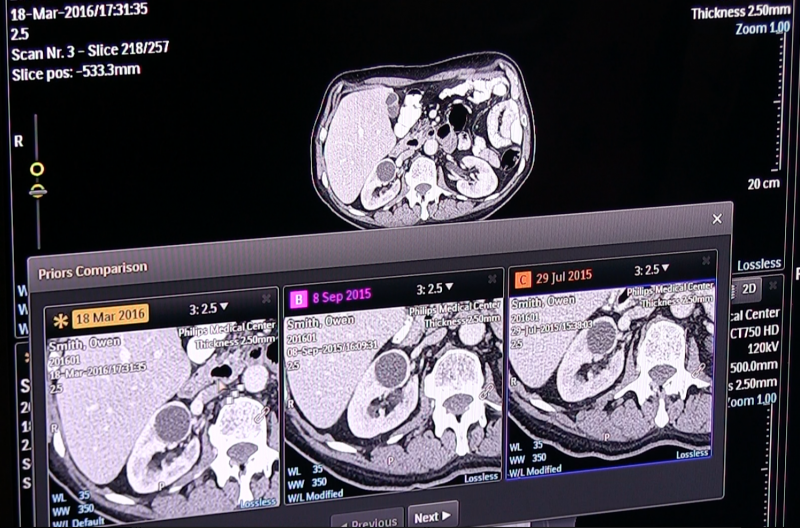

Philips uses AI as a component of its new Illumeo software with adaptive intelligence, which automatically pulls in related prior exams for radiology. The user can click on an area of the anatomy in a specific MPR view, and AI will find and open prior imaging studies to show the same anatomy, slice and orientation. For oncology imaging, with a couple of clicks on the tumor in the image, the AI will perform an automated quantification and then perform the same measurements on the priors, presenting a side-by-side comparison of the tumor assessment. This can significantly reduce the time involved in tumor tracking assessment and speed workflow.
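The arithmetic behind a side-by-side tumor comparison can be sketched in a few lines. This is not the Illumeo interface: the `compare_to_priors` function and the measurements below are hypothetical, and a single longest-diameter value stands in for the fuller quantification a vendor system would perform.

```python
from datetime import date


def compare_to_priors(current, priors):
    """Compare the current measurement with each prior exam.

    current and each prior are (exam_date, longest_diameter_mm) pairs.
    Returns (prior_date, prior_diameter_mm, percent_change_to_current) rows,
    oldest prior first.
    """
    rows = []
    for exam_date, diameter in sorted(priors):
        change = (current[1] - diameter) / diameter * 100
        rows.append((exam_date, diameter, round(change, 1)))
    return rows


# Made-up measurements for illustration only.
priors = [(date(2016, 12, 1), 25.0), (date(2016, 6, 1), 20.0)]
current = (date(2017, 2, 20), 30.0)
rows = compare_to_priors(current, priors)
```

Here a 30 mm lesion is reported as 50 percent larger than the June 2016 measurement and 20 percent larger than the December 2016 one.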

AI is Elementary to Watson

IBM Watson has been cited for the past few years as being at the forefront of medical AI, but IBM has yet to commercialize the technology. Some of the first versions of work-in-progress software were shown at HIMSS by partner vendors Agfa and Siemens. Agfa showed an impressive example of how the technology works. A digital radiography (DR) chest X-ray exam was called up and Watson reviewed the image and determined the patient had small-cell lung cancer and evidence of both lung and heart surgery. Watson then searched the picture archiving and communication system (PACS), EMR and departmental reporting systems to bring in:

- Prior chest imaging studies;

- Cardiology report information;

- Medications the patient is currently taking;

- Patient history relevant to them having COPD and a history of smoking that might relate to their current exam;

- Recent lab reports;

- Oncology patient encounters including chemotherapy; and

- Radiation therapy treatments.

When the radiologist opens the study, all this information is presented in a concise format and greatly enhances the picture of this patient’s health. Agfa said the goal is to improve the radiologist’s understanding of the patient to improve the diagnosis, therapies and resulting patient outcomes without adding more burden on the clinician.

IBM purchased Merge Healthcare in 2015 for $1 billion, partly to get an established foothold in the medical IT market. However, the purchase also gave Watson millions of radiology studies and a vast amount of existing medical record data to help train the AI in evaluating patient data and get better at reading imaging exams. IBM Watson is now licensing its software through third-party agreements with other health IT vendors. The contracts stipulate that each vendor needs to add additional value to Watson with their own programming, not just become a reseller. Probably the most important stipulation of these new contracts is that vendors also are required to share access to all the patient data and imaging studies they have access to. This allows Watson to continue to hone its clinical intelligence with millions of new patient records.

The Basics of Machine Learning in Radiology

Access to vast quantities of patient data and images is needed to give the AI software algorithms educational material to learn from. Sorting through massive amounts of big data is a major component of how AI learns what is important for clinicians and which data elements are related to various disease states, and how it gains clinical understanding. It is a similar process to medical students learning the ropes, but it uses far more educational input than a human could absorb. The first step in machine learning software is for it to ingest medical textbooks and care guidelines and then review examples of clinical cases. Unlike human students, AI learns from cases that number in the millions.

For cases where the AI did not accurately determine the disease state or found incorrect or irrelevant data, software programmers go back and refine the AI algorithm, iteration after iteration, until the AI software gets it right in the majority of cases. In medicine, there are so many variables that it is difficult for people or machines to always arrive at the correct diagnosis. However, in terms of percentage accuracy, experts now say AI software reading medical imaging studies can often match, or in some cases outperform, human radiologists. This is especially true for rare diseases or presentations, where a radiologist might only see a handful of such cases during their entire career. AI has the advantage of reviewing hundreds or even thousands of these rare studies from archives to become proficient at reading them and identifying a proper diagnosis. Also, unlike in the human mind, that experience always remains fresh in the computer’s memory.

AI algorithms read medical images much as radiologists do, by identifying patterns. AI systems are trained using vast numbers of exams to determine what normal anatomy looks like on scans from CT, magnetic resonance imaging (MRI), ultrasound or nuclear imaging. Then abnormal cases are used to train the eye of the AI system to identify anomalies, similar to computer-aided detection software (CAD). However, unlike CAD, which just highlights areas a radiologist may want to take a closer look at, AI software has a more analytical cognitive ability based on much more clinical data and reading experience than previous generations of CAD software. For this reason, experts who are helping develop AI for medicine often refer to the cognitive ability as “CAD that works.”

AI All Around Us and the Next Step in Radiology

Deep learning computers are already driving cars, monitoring financial data for theft, translating languages and recognizing people’s moods based on facial recognition, said Keith Dreyer, DO, Ph.D., vice chairman of radiology computing and information sciences at Massachusetts General Hospital, Boston. He was among the key speakers at the opening session of the 2016 Radiological Society of North America (RSNA) meeting in November, where he discussed AI’s entry into medical imaging. He is also in charge of his institution’s development of its own AI system to assist physicians at Mass General.

“The data science revolution started about five years ago with the advent of IBM Watson and Google Brain,” Dreyer explained. He said the 2012 introduction of deep learning algorithms really pushed AI forward and by 2014 the scales began to tip in terms of machines reading radiology studies correctly, reaching around 95 percent accuracy.

Dreyer said AI software for imaging is not new, as most people already use it on Facebook to automatically tag friends the platform identifies using facial recognition algorithms. He said training AI is a similar concept, where you can start with showing a computer photos of cats and dogs and it can be trained to determine the difference after enough images are used.
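The idea of showing a computer labeled examples and correcting it after each mistake can be illustrated with a perceptron, one of the simplest learning algorithms. This is a toy stand-in and not the deep learning used in commercial imaging AI; the two numeric features and all sample values below are made up for illustration.

```python
def train_perceptron(samples, labels, epochs=100, lr=0.1):
    """Learn a linear rule from labeled examples (labels are +1 or -1)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            score = sum(w * xi for w, xi in zip(weights, x)) + bias
            predicted = 1 if score >= 0 else -1
            if predicted != y:
                # Wrong answer: nudge the weights toward the correct label.
                weights = [w + lr * y * xi for w, xi in zip(weights, x)]
                bias += lr * y
                errors += 1
        if errors == 0:  # every training example is now classified correctly
            break
    return weights, bias


def predict(weights, bias, x):
    """Return +1 (cat) or -1 (dog) for a feature tuple."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score >= 0 else -1


# Hypothetical features per photo: (ear pointiness, snout length), scaled 0 to 1.
cats = [(0.9, 0.2), (0.8, 0.3), (0.85, 0.1)]
dogs = [(0.3, 0.8), (0.2, 0.9), (0.4, 0.7)]
samples = cats + dogs
labels = [1] * len(cats) + [-1] * len(dogs)

weights, bias = train_perceptron(samples, labels)
```

After a few passes over the six examples the rule separates the two groups, so `predict(weights, bias, (0.95, 0.15))` falls on the cat side. Real image classifiers learn from raw pixels and millions of examples, but the correct-on-error loop is the same basic idea.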

AI requires big data, massive computing power, powerful algorithms, broad investments and then a lot of translation and integration from a programming standpoint before it can be commercialized, Dreyer said.

From a radiology standpoint, he said there are two types of AI. The first type, quantification AI, is already starting to receive U.S. Food and Drug Administration (FDA) clearance because it only requires a 510(k) submission. AI developed for clinical interpretation will require FDA premarket approval (PMA), which involves clinical trials.

Before machines start conducting primary or peer review reads, Dreyer said it is much more likely AI will be used to read old exams retrospectively to help hospitals find new patients for conditions the patient may not realize they have. He said about 9 million Americans qualify for low-dose CT scans to screen them for lung cancer. He said AI can be trained to search through all the prior chest CT exams on record in the health system to help identify patients that may have lung cancer. This type of retrospective screening may apply to other disease states as well, especially if the AI can pull in genomic testing results to narrow the review to patients who are predisposed to some diseases.
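A retrospective sweep of this kind can be pictured with a deliberately crude sketch that scans archived report text rather than the images themselves. The patterns, patient identifiers and report snippets are all hypothetical, and keyword matching with a simple negation check is only a stand-in for a trained model reading the prior CT exams.

```python
import re

# Hypothetical patterns; a real system would analyze the images, not just the text.
FINDING = re.compile(r"\b(nodule|mass)\b", re.IGNORECASE)
NEGATION = re.compile(r"\b(no|without|negative for)\b", re.IGNORECASE)


def flag_for_review(reports):
    """Return {patient_id: [sentences]} for reports mentioning a non-negated finding.

    reports is an iterable of (patient_id, report_text) pairs.
    """
    flagged = {}
    for patient_id, text in reports:
        for sentence in re.split(r"[.;]\s*", text):
            if FINDING.search(sentence) and not NEGATION.search(sentence):
                flagged.setdefault(patient_id, []).append(sentence.strip())
    return flagged


# Made-up report snippets for illustration only.
reports = [
    ("P001", "Lungs are clear. No pulmonary nodule or mass."),
    ("P002", "6 mm nodule in the right upper lobe. No pleural effusion."),
    ("P003", "Stable postsurgical changes."),
]
flagged = flag_for_review(reports)
```

Only the second patient is flagged here, because the first report negates the finding and the third never mentions one. Any real deployment would need clinical review of every flagged case.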

He said overall, AI offers a major opportunity to enhance and augment radiology reading, not to replace radiologists.

“We are focused on talking into a microphone and we are ignoring all this other data that is out there in the patient record,” Dreyer said. “We need to look at the imaging as just another source of data for the patient.” He said AI can help automate quantification and quickly pull out related patient data from the EMR that will aid diagnosis or the understanding of a patient’s condition.

Related Radiology Artificial Intelligence Content Links:

VIDEO: Technology Report — Artificial Intelligence at RSNA 2017

Read the related article "Artificial intelligence in radiology" in the journal Nature Reviews Cancer

Key RSNA 2017 Study Presentations, Trends and Video

VIDEO "Deep Learning is Key Technology Trend at RSNA 2017"

VIDEO "How Utilization of Artificial Intelligence Will Impact Radiology" — an interview with Adam Flanders, M.D., chair of the RSNA Radiology Informatics Committee

VIDEO: Examples of How Artificial Intelligence Will Improve Medical Imaging

Value in Radiology Takes on Added Depth at RSNA 2017

VIDEO: Machine Learning and the Future of Radiology

VIDEO: Expanding Role for Artificial Intelligence in Medical Imaging

How Artificial Intelligence Will Change Medical Imaging

Read the blog “How Intelligent Machines Could Make a Difference in Radiology.”

April 15, 2024